The application is built using ReactJS. When GIFs are uploaded from the browser, the application sends them to Indexify through the ingestion API, which can handle hundreds of thousands of concurrent GIF uploads. Indexify runs the extraction processes described below asynchronously and in parallel on a cluster of extractors running AI models. The extracted data, such as visual descriptions and embeddings, is automatically stored in the databases.

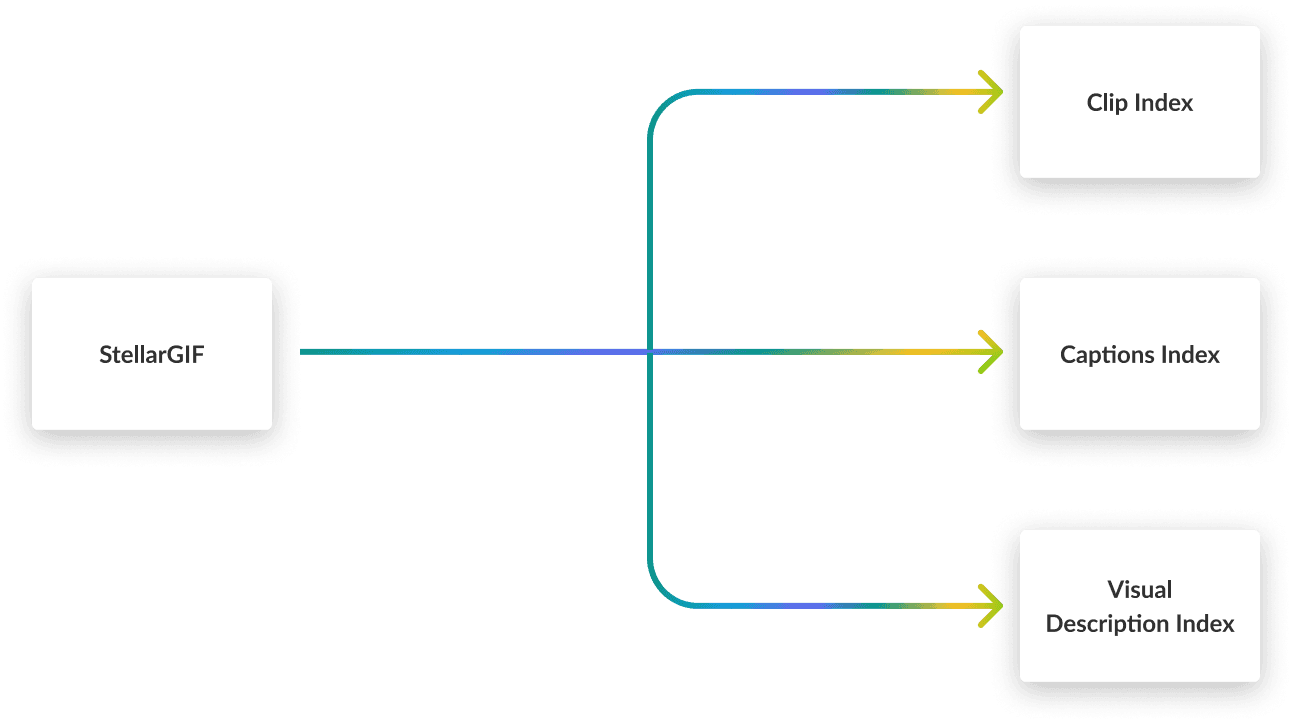

MoonDream's visual understanding model describes every image. This description is then embedded and stored in a vector index.

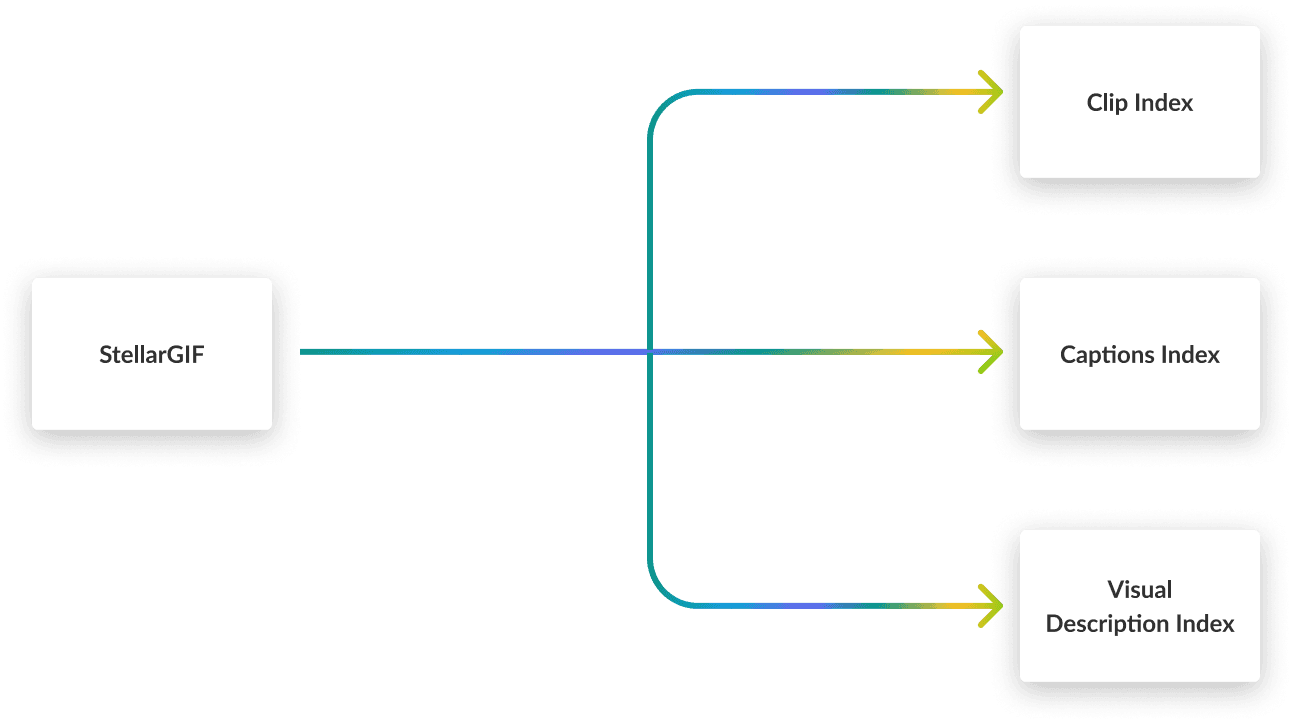

The human-generated caption is embedded and stored in a vector index.

The image is embedded and stored in a vector index.

The application queries three different indexes in parallel with the search query. It combines the results and then re-ranks them. The re-ranked results are then displayed to the user.
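As a rough illustration of the parallel lookup, here is a minimal Python sketch. It is not the application's actual code: `search_all_indexes` and the `searchers` mapping are hypothetical names, and each searcher is assumed to be any callable that takes a query and a result limit and returns a ranked list of GIF ids.

```python
from concurrent.futures import ThreadPoolExecutor


def search_all_indexes(query, searchers, top_k=10):
    """Run every searcher concurrently and collect one ranked list per index.

    `searchers` maps an index name to a callable(query, top_k) -> list of ids.
    Both the mapping and the callables are assumptions made for this sketch.
    """
    # One worker per index; max(1, ...) avoids an error on an empty mapping.
    with ThreadPoolExecutor(max_workers=max(1, len(searchers))) as pool:
        futures = {
            name: pool.submit(fn, query, top_k)
            for name, fn in searchers.items()
        }
        # result() blocks until that index has answered.
        return {name: future.result() for name, future in futures.items()}


# Stand-in searchers that return fixed rankings, for demonstration only.
def fake_description_search(query, top_k):
    return ["gif_a", "gif_b", "gif_c"][:top_k]


def fake_caption_search(query, top_k):
    return ["gif_b", "gif_a", "gif_d"][:top_k]


def fake_image_search(query, top_k):
    return ["gif_b", "gif_c", "gif_a"][:top_k]


results = search_all_indexes(
    "happy cat",
    {
        "description": fake_description_search,
        "caption": fake_caption_search,
        "image": fake_image_search,
    },
    top_k=2,
)
```

Threads suit this step because each lookup is expected to spend most of its time waiting on a network call to an index rather than computing.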

When a user searches for GIFs with a text query, we look up all of the indexes and merge the results into a single list using Reciprocal Rank Fusion.
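Reciprocal Rank Fusion scores each item by summing `1 / (k + rank)` over every ranked list in which it appears, then sorts by that score. The sketch below shows the general technique; the function name, the sample ids, and the constant `k = 60` (a commonly used default) are assumptions for illustration, not values taken from the application.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of ids into one list, best first.

    Each item receives 1 / (k + rank) from every list that contains it,
    where rank starts at 1. Ties are broken by id so output is deterministic.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, item_id in enumerate(ranking, start=1):
            scores[item_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda item_id: (-scores[item_id], item_id))


# Hypothetical rankings from the three indexes.
fused = reciprocal_rank_fusion(
    [
        ["gif_a", "gif_b", "gif_c"],  # description index
        ["gif_b", "gif_a", "gif_d"],  # caption index
        ["gif_b", "gif_c", "gif_a"],  # image index
    ]
)
```

Because only ranks are used, the similarity scores from different indexes never need to be put on a common scale.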

We re-rank the results once again by asking an LLM to prioritize the results based on the user query.
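One way the LLM re-ranking step could look is sketched below. Everything here is an assumption made for illustration: the prompt wording, the function names, and the `llm` parameter, which stands in for any callable that takes a prompt string and returns the model's text reply. The source does not say which model or prompt the application uses.

```python
import re


def build_rerank_prompt(query, candidates):
    """Number the candidate descriptions and ask for an ordering."""
    numbered = "\n".join(
        f"{i}. {text}" for i, text in enumerate(candidates, start=1)
    )
    return (
        f"User query: {query}\n"
        f"Candidates:\n{numbered}\n"
        "Reply with the candidate numbers, most relevant first, "
        "separated by commas."
    )


def rerank_with_llm(query, candidates, llm):
    """Reorder candidates using the numbers found in the LLM reply.

    `llm` is a hypothetical callable(prompt) -> str. Numbers that are out of
    range or repeated are ignored, and any candidate the model left out is
    appended in its original order, so nothing is dropped.
    """
    if not candidates:
        return []
    reply = llm(build_rerank_prompt(query, candidates))
    order = []
    for token in re.findall(r"\d+", reply):
        index = int(token) - 1
        if 0 <= index < len(candidates) and index not in order:
            order.append(index)
    order += [i for i in range(len(candidates)) if i not in order]
    return [candidates[i] for i in order]


# A stand-in "model" that always prefers candidate 3, then 1.
reranked = rerank_with_llm(
    "bird flying",
    ["a cat sleeping", "a dog running", "a bird in flight"],
    llm=lambda prompt: "3, 1",
)
```

Falling back to the original order for anything the model omits keeps the result list complete even when the reply is malformed.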
